Graph-informed linear-triangular transport

14:00 in the NERSC Copernicus lecture room.

Talk by Berent Lunde (Equinor).

Abstract

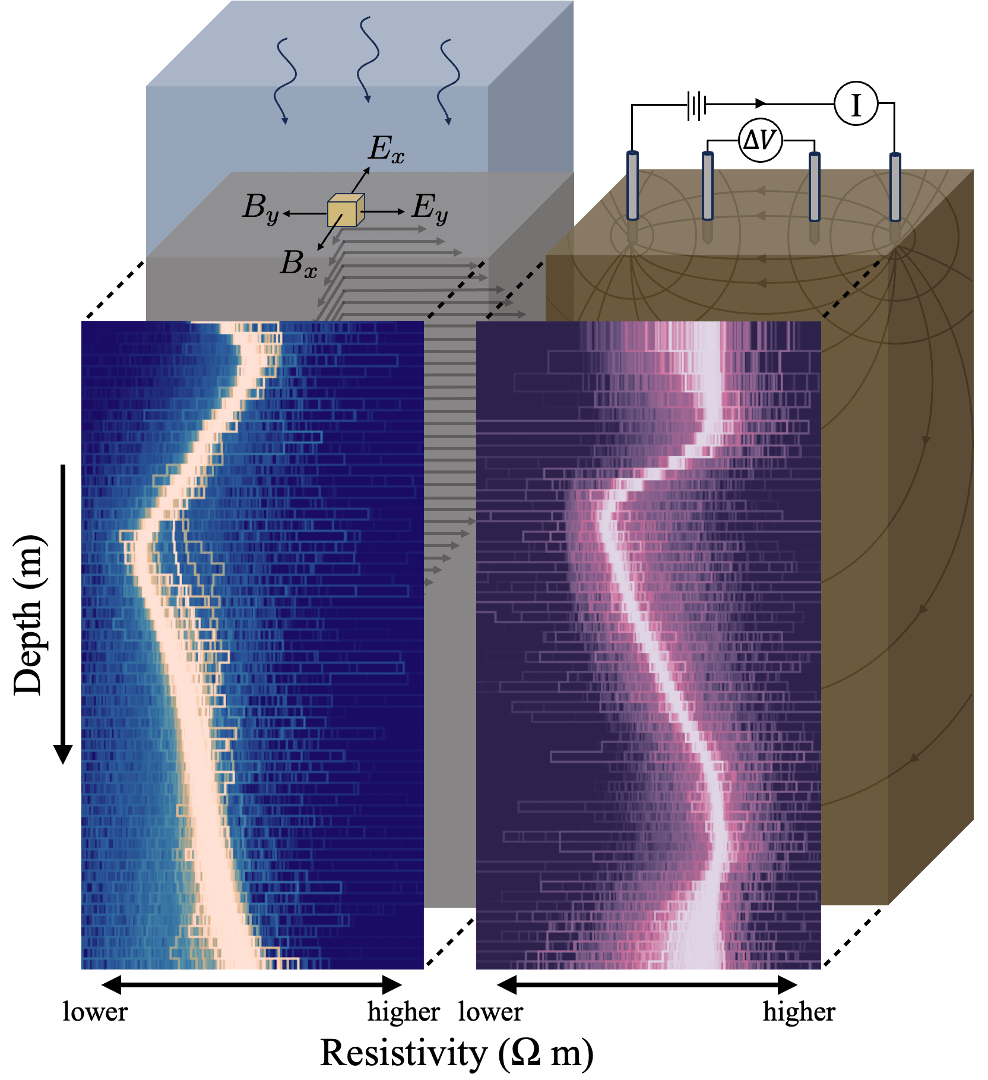

Do EnKFs trivially converge as the ensemble size goes to infinity? No. When the state evolution is governed by SPDEs, we enter the realm of infinite dimensions, where the curse of dimensionality looms large. SPDEs also enter as (sometimes approximate) descriptions of Gaussian fields. We seek ensemble-based data assimilation methods that overcome these high-dimensional hurdles without falling prey to spurious correlations.
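The spurious-correlation problem can be made concrete with a small experiment. The sketch below is our own illustration, not material from the talk: it draws an ensemble of truly independent variables, so every true correlation is zero, and reports the largest absolute sample correlation. Holding the ensemble size fixed while the number of grid variables `dim` grows (as when a discretization is refined), the largest spurious correlation grows. The function name `max_spurious_correlation` and the chosen sizes are hypothetical.

```python
import numpy as np

def max_spurious_correlation(n_members: int, dim: int, seed: int = 0) -> float:
    """Largest absolute off-diagonal sample correlation among `dim` truly
    independent standard-normal variables, estimated from `n_members` samples."""
    rng = np.random.default_rng(seed)
    ensemble = rng.standard_normal((n_members, dim))  # rows = ensemble members
    corr = np.corrcoef(ensemble, rowvar=False)        # dim x dim sample correlation
    off_diag = corr[~np.eye(dim, dtype=bool)]         # every true value here is 0
    return float(np.abs(off_diag).max())

# Fixed ensemble size, increasingly fine "grids": the worst spurious correlation grows.
for dim in (10, 100, 1000):
    print(dim, round(max_spurious_correlation(n_members=50, dim=dim), 2))
```

With 50 members and 1000 variables there are roughly half a million pairs, so some sample correlations are large purely by chance; an update that trusts them moves state variables that have nothing to do with the observation.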

Thinking statistically: we seek to minimize the Kullback-Leibler divergence (KLD) of our model from the data. Unfortunately, as the resolution of physics simulations and spatial discretizations improves (good), the KLD grows and statistical estimation deteriorates (bad).
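A rough numerical illustration of this effect, in a toy setting of our own that the abstract does not describe: the truth is a `dim`-dimensional standard Gaussian, the model is a Gaussian fitted with the unstructured sample covariance of a fixed number of samples, and the divergence KL(truth || model) is computed with the closed-form Gaussian formula. Refining the "grid" (larger `dim`) at fixed sample size increases the KLD. The helper names are hypothetical.

```python
import numpy as np

def gaussian_kl(cov_true, cov_model):
    """KL( N(0, cov_true) || N(0, cov_model) )
    = 0.5 * [tr(cov_model^-1 cov_true) - p + log det cov_model - log det cov_true]."""
    p = cov_true.shape[0]
    trace_term = np.trace(np.linalg.solve(cov_model, cov_true))
    _, logdet_model = np.linalg.slogdet(cov_model)
    _, logdet_true = np.linalg.slogdet(cov_true)
    return 0.5 * (trace_term - p + logdet_model - logdet_true)

def kl_of_sample_covariance(dim, n_samples, seed=0):
    """KLD of a Gaussian fitted with the sample covariance, assuming an
    identity-covariance truth (a deliberately simple, hypothetical choice)."""
    rng = np.random.default_rng(seed)
    cov_true = np.eye(dim)
    samples = rng.standard_normal((n_samples, dim))
    cov_hat = np.cov(samples, rowvar=False)  # dim*(dim+1)/2 free parameters
    return gaussian_kl(cov_true, cov_hat)

for dim in (10, 50, 150):
    print(dim, round(kl_of_sample_covariance(dim, n_samples=200), 2))
```

The number of covariance parameters grows quadratically with `dim` while the data stay fixed, which is why the divergence worsens as resolution improves.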

Thinking physically: drop a stone in the sea, and information (the wave) propagates through the medium (the sea). Unfortunately, the families of covariance matrices we typically explore admit teleportation of information.
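The stone-in-the-sea picture has a standard Gaussian counterpart: local propagation shows up as zeros in the precision (inverse covariance) matrix, since for a Gaussian, Q[i, j] = 0 means x_i and x_j are conditionally independent given all other variables. The sketch below assumes a crude 1-D discretization of the operator kappa^2 - d^2/dx^2 (our choice, not necessarily the operator used in the talk) and shows that the precision is tridiagonal while the implied covariance is completely dense. An estimator that searches over dense covariance matrices without structure is free to place direct dependence between any two grid points.

```python
import numpy as np

def spde_precision_1d(n, kappa=0.5):
    """Tridiagonal precision kappa^2 * I + L, where L is the second-difference
    matrix: an assumed, crude discretization of (kappa^2 - d^2/dx^2) on a 1-D grid."""
    lap = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    return kappa**2 * np.eye(n) + lap

n = 8
Q = spde_precision_1d(n)  # sparse: each node only interacts with its neighbours
C = np.linalg.inv(Q)      # implied covariance
print("nonzeros in precision: ", np.count_nonzero(np.abs(Q) > 1e-12))  # 3n - 2 = 22
print("nonzeros in covariance:", np.count_nonzero(np.abs(C) > 1e-12))  # n * n = 64
# The end points are conditionally independent given the rest (Q[0, 7] == 0),
# yet marginally correlated (C[0, 7] != 0): dependence travels via the neighbours.
print("Q[0, 7] =", Q[0, 7], " corr(x0, x7) =", C[0, 7] / np.sqrt(C[0, 0] * C[7, 7]))
```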

Informing the statistical estimation about structure (e.g., information propagation) makes it well-conditioned. By exploring only a meaningful subset of all dependence structures, the KLD improves and, in some sense, we adhere to physics. This involves tools such as graphs, Markov properties, triangular maps, sparse learning algorithms and sparse linear algebra, some reformulation of the update equations, and bargaining with SPDEs.
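One way to read "graph-informed linear-triangular transport", stated here as an assumption rather than as the speaker's method: estimate a lower-triangular linear map A such that z = A x is approximately standard normal, where row k of A comes from regressing x_k only on its parents in a known graph. The implied precision is A^T A, and A inherits the graph's sparsity. In the toy setting below (a 1-D chain, with hypothetical sizes and helper names), this structured estimate is compared with the unstructured sample covariance by their KL divergence from the truth.

```python
import numpy as np

def fit_sparse_triangular_map(ensemble, parents):
    """Fit lower-triangular A with z = A x approx. N(0, I) (hypothetical helper).
    Row k regresses x_k on parents[k] (indices < k); all other entries stay zero.
    The ensemble is assumed zero-mean for simplicity."""
    n, dim = ensemble.shape
    A = np.zeros((dim, dim))
    for k in range(dim):
        y, pa = ensemble[:, k], list(parents[k])
        if pa:
            coef, *_ = np.linalg.lstsq(ensemble[:, pa], y, rcond=None)
            resid = y - ensemble[:, pa] @ coef
        else:
            coef, resid = np.zeros(0), y
        sd = np.sqrt(np.mean(resid**2))  # conditional standard deviation of x_k
        A[k, k] = 1.0 / sd               # z_k = (x_k - coef . x_parents) / sd
        A[k, pa] = -coef / sd
    return A

def gaussian_kl(cov_true, cov_model):
    """KL( N(0, cov_true) || N(0, cov_model) )."""
    p = cov_true.shape[0]
    tr = np.trace(np.linalg.solve(cov_model, cov_true))
    return 0.5 * (tr - p + np.linalg.slogdet(cov_model)[1] - np.linalg.slogdet(cov_true)[1])

def chain_precision(dim, kappa=0.5):
    """Assumed tridiagonal truth: a Markov chain on a 1-D grid."""
    return kappa**2 * np.eye(dim) + 2 * np.eye(dim) - np.eye(dim, k=1) - np.eye(dim, k=-1)

dim, n_members = 40, 60
rng = np.random.default_rng(1)
cov_true = np.linalg.inv(chain_precision(dim))
cov_true = 0.5 * (cov_true + cov_true.T)  # symmetrize away round-off
ensemble = rng.multivariate_normal(np.zeros(dim), cov_true, size=n_members)

# Assumed known graph: on a 1-D chain, x_k given x_{<k} depends only on x_{k-1}.
parents = [[k - 1] if k > 0 else [] for k in range(dim)]
A = fit_sparse_triangular_map(ensemble, parents)
prec_hat = A.T @ A  # estimated precision, as sparse as the graph allows

kl_graph = gaussian_kl(cov_true, np.linalg.inv(prec_hat))
kl_sample = gaussian_kl(cov_true, np.cov(ensemble, rowvar=False))
print(f"KL, graph-informed triangular map:  {kl_graph:.2f}")
print(f"KL, unstructured sample covariance: {kl_sample:.2f}")
```

Because each row is a small regression on a handful of parents, the number of free parameters grows linearly in `dim` rather than quadratically, which is one reason a structured estimate can converge where the sample covariance does not.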

The end result comes at (practically) no cost to reservoir engineers at Equinor, while understanding of the update can be significantly improved thanks to the decomposition of dependence. So users will be happy. Remus will be happy because 1. statistical dependence estimation now converges, 2. distance-based localization is dominated, and 3. the “localization” effect is truly adaptive to the data.
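The abstract mentions a reformulated update. A common reformulation, assumed here purely for illustration, is the Kalman update in information (precision) form: Q_post = Q + H^T R^-1 H and Q_post mu_post = Q mu + H^T R^-1 y. With a sparse prior precision Q and a local observation operator H, the posterior precision stays sparse, and how far the update spreads from the observation is dictated by Q itself rather than by a distance-based taper. The function name `information_update`, the grid size, and the observation below are hypothetical.

```python
import numpy as np

def information_update(prior_mean, prior_prec, H, R, y):
    """Gaussian (Kalman) update written in precision form (illustrative sketch)."""
    R_inv = np.linalg.inv(R)
    post_prec = prior_prec + H.T @ R_inv @ H      # stays sparse if H is local
    rhs = prior_prec @ prior_mean + H.T @ R_inv @ y
    post_mean = np.linalg.solve(post_prec, rhs)   # a sparse Cholesky solve in practice
    return post_mean, post_prec

dim = 30
# Assumed tridiagonal prior precision on a 1-D grid (e.g. estimated from the ensemble).
Q = 0.25 * np.eye(dim) + 2 * np.eye(dim) - np.eye(dim, k=1) - np.eye(dim, k=-1)
H = np.zeros((1, dim))
H[0, 10] = 1.0                 # a single point observation at grid cell 10
R = np.array([[0.1]])          # observation-error variance
y = np.array([1.0])

mean_post, prec_post = information_update(np.zeros(dim), Q, H, R, y)
changed = np.argwhere(np.abs(prec_post - Q) > 0)
print(changed)                                   # [[10 10]]: only one entry changes
print(np.round(mean_post[[10, 12, 15, 20]], 3))  # increment decays away from cell 10
```

If Q is itself estimated from the ensemble, for instance by a graph-informed triangular fit, then the decay of the increment is learned from data, which is one sense in which such "localization" can be adaptive.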

And it all scales.